
Imagine you have designed a distributed software system whose databases can hold 10,000 rows in total. A single user runs an automated script that tries to fill all 10,000 rows at once, taking your software down within seconds. Such attacks are quite common in software markets where competition is at its peak. How would you deal with this problem as a software developer? It turns out there is a simple way to limit how much of a resource a specific IP address or user can consume.

When we build an API, we allocate a certain number of servers to meet the scaling requirements, specifically the expected request volume. For instance, for a college project we may allocate only a couple of servers to handle and process incoming traffic. Conversely, for a very popular API (take Google Maps, for example), we would configure a large number of servers. Either way, we never want a single client or IP to overwhelm the system with a flood of requests and trigger faults across our distributed architecture. We also want our systems to behave predictably and meet the agreed service levels, which are often stated in the SRS (Software Requirements Specification). To achieve this, we must control the rate of traffic coming from each client so that it stays within a limit. Another aspect is cost and budget control: we never want unnecessary usage and an unexpected bill at the end of the month.

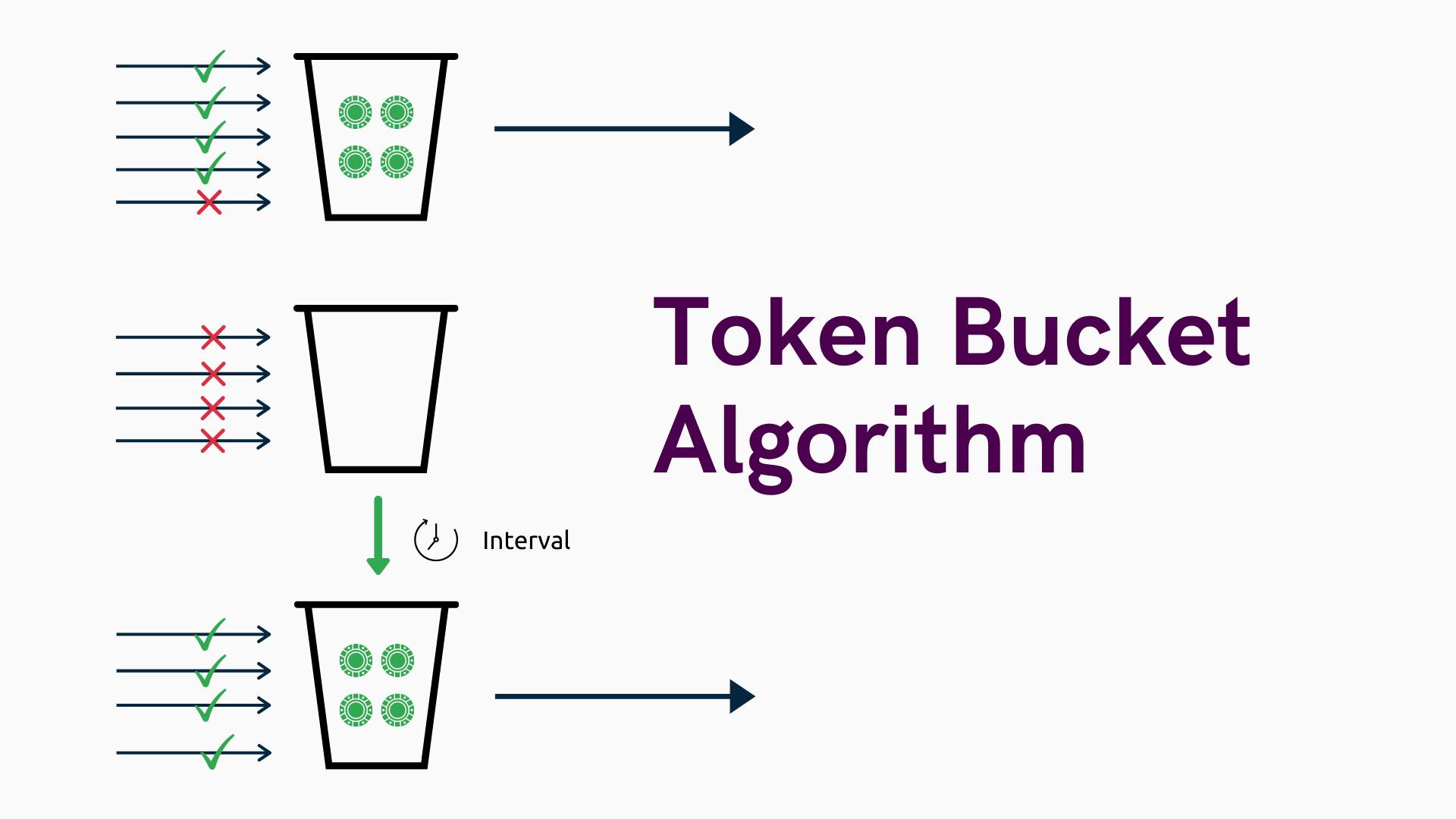

Software can use a variety of techniques to rate limit requests. Rate limiting simply means that if you exceed a certain number of requests in a given time frame, the API rejects further requests, for example by throwing a ThrottlingException. The token bucket algorithm is one of the best-known rate limiting techniques, and it is also used in AWS APIs. The algorithm has two major components, burst and refill. Burst defines the maximum number of 'tokens' available to an IP, and each request consumes one token. Refill defines the rate at which the backend service adds new tokens to your bucket. In other words, it is how fast the backend gives you more tokens to call the API.
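To make burst and refill concrete, here is a minimal single-process sketch of a token bucket. The class name `TokenBucket`, the parameters `burst` and `refill_rate`, and the injectable `clock` are illustrative assumptions, not the API of AWS or any particular library.

```python
import time


class TokenBucket:
    """Illustrative token bucket (names are assumptions, not a real library API).

    burst:       maximum number of tokens the bucket can hold
    refill_rate: tokens added per second
    """

    def __init__(self, burst, refill_rate, clock=time.monotonic):
        self.burst = burst
        self.refill_rate = refill_rate
        self.clock = clock
        self.tokens = float(burst)   # start with a full bucket
        self.last_refill = clock()

    def _refill(self):
        # Add tokens for the time elapsed since the last check, capped at burst.
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.burst, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now

    def allow(self, cost=1):
        # Return True and consume tokens if enough are available, else False.
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A server would typically keep one bucket per client, for example in a dictionary keyed by IP address, and reject the request (or raise a throttling error) whenever `allow()` returns `False`.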

APIs are one of the biggest assets of any business. They help the users of a website or mobile application complete their tasks. As the number of users increases, the website or mobile application starts showing signs of performance degradation. As a result, users with better connections or faster interfaces might get a better experience than others. API throttling is an elegant solution that helps organisations ensure fair use of their APIs. REST APIs use rate limiting as a protection against DoS attacks and overloaded servers, immediately returning an HTTP 429 (Too Many Requests) error so that the client has to send a new request later. Setting a timeout is the easiest way to limit API requests.

Implementing API throttling in a distributed system can be a difficult task. Once the app or service has multiple servers worldwide, throttling has to be applied across the whole distributed system. Consecutive requests from the same user may be forwarded to different servers. If the throttling logic resides on every node, the nodes need to synchronize with each other in real time, which can lead to inconsistency and race conditions. One way to implement API throttling in distributed systems is to use sticky sessions. In this method, all requests from a user are always serviced by a particular server. However, this solution is neither well balanced nor fault tolerant. A second solution is to use locks, so that only one node at a time can read and update a given user's request count.
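The lock-based approach can be sketched as follows. This is a simplified in-process stand-in: a `threading.Lock` and a dictionary play the role of a distributed lock and a shared store, and the class name `SharedWindowLimiter` and its parameters are hypothetical. The point is only that the read-modify-write of the counter happens while the lock is held, which avoids the race condition described above.

```python
import threading
import time


class SharedWindowLimiter:
    """Fixed-window request counter guarded by a lock (illustrative sketch).

    Assumption: in a real deployment the dict would be a store shared by all
    nodes and the lock would be a distributed lock.
    """

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock
        self._lock = threading.Lock()
        self._windows = {}  # client_id -> (window_start, request_count)

    def allow(self, client_id):
        now = self.clock()
        with self._lock:  # only one caller at a time may update the counters
            start, count = self._windows.get(client_id, (now, 0))
            if now - start >= self.window_seconds:
                start, count = now, 0  # window expired: start a new one
            if count >= self.limit:
                self._windows[client_id] = (start, count)
                return False  # caller would answer with HTTP 429
            self._windows[client_id] = (start, count + 1)
            return True
```

Holding one global lock keeps the sketch short but serializes all clients. A per-client lock would reduce contention at the cost of more bookkeeping.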

For a better understanding, try the GitHub API or the LinkedIn API and observe how their rate limiting behaves.
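As one way to try this, the sketch below reads the rate limit headers that GitHub's REST API attaches to its responses (`x-ratelimit-limit`, `x-ratelimit-remaining`, and `x-ratelimit-reset`, the last being a Unix timestamp). The helper names `summarize_rate_limit` and `fetch_github_rate_limit` are made up for this example, and the sketch covers GitHub only.

```python
import urllib.request
from datetime import datetime, timezone


def summarize_rate_limit(headers):
    """Pull GitHub-style rate limit values out of a mapping of response headers."""
    lowered = {key.lower(): value for key, value in headers.items()}
    return {
        "limit": int(lowered["x-ratelimit-limit"]),
        "remaining": int(lowered["x-ratelimit-remaining"]),
        # The reset header is a Unix timestamp (seconds since the epoch, UTC).
        "reset_at": datetime.fromtimestamp(
            int(lowered["x-ratelimit-reset"]), tz=timezone.utc
        ),
    }


def fetch_github_rate_limit(url="https://api.github.com/rate_limit"):
    """Make one unauthenticated request and summarize its rate limit headers."""
    with urllib.request.urlopen(url) as response:
        return summarize_rate_limit(dict(response.headers.items()))
```

Calling `fetch_github_rate_limit()` a few times in a row should show the `remaining` value for your IP, and `reset_at` tells you when the quota is replenished.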
